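Build your project with Microsoft Olive

An enterprise that wants its own industry-vertical model has to work through data, fine-tuning, and deployment. Earlier content introduced Microsoft Olive; this section goes into more detail based on an end-to-end (E2E) workflow.

Microsoft Olive configuration

To learn about Microsoft Olive configuration, see Fine-tuning with Microsoft Olive.

Note: To stay up to date, install Microsoft Olive with the following command: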

pip install git+https://github.com/microsoft/Olive -U

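Running Microsoft Olive on local compute

LoRA

This example uses local compute and a local dataset. Add the configuration file to the fine-tuning folder. The text calls it olive.config, while the run command later in this section passes olive-config.json, so pick one name and use it consistently: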

    {
        "input_model": {
            "type": "PyTorchModel",
            "config": {
                "hf_config": {
                    "model_name": "../models/microsoft/Phi-3-mini-4k-instruct",
                    "task": "text-generation",
                    "from_pretrained_args": {
                        "trust_remote_code": true
                    }
                }
            }
        },
        "systems": {
            "local_system": {
                "type": "LocalSystem",
                "config": {
                    "accelerators": [
                        {
                            "device": "GPU",
                            "execution_providers": [
                                "CUDAExecutionProvider"
                            ]
                        }
                    ]
                }
            }
        },
        "data_configs": [
            {
                "name": "dataset_default_train",
                "type": "HuggingfaceContainer",
                "params_config": {
                    "data_name": "json",
                    "data_files": "../datasets/datasets.json",
                    "split": "train",
                    "component_kwargs": {
                        "pre_process_data": {
                            "dataset_type": "corpus",
                            "text_cols": [
                                "INSTRUCTION",
                                "RESPONSE",
                                "SOURCE"
                            ],
                            "text_template": "<|user|>\n{INSTRUCTION}<|end|>\n<|assistant|>\n{RESPONSE}\n( source : {SOURCE})<|end|>",
                            "corpus_strategy": "join",
                            "source_max_len": 2048,
                            "pad_to_max_len": false,
                            "use_attention_mask": false
                        }
                    }
                }
            }
        ],
        "passes": {
            "lora": {
                "type": "LoRA",
                "config": {
                    "target_modules": [
                        "o_proj",
                        "qkv_proj"
                    ],
                    "double_quant": true,
                    "lora_r": 64,
                    "lora_alpha": 64,
                    "lora_dropout": 0.1,
                    "train_data_config": "dataset_default_train",
                    "eval_dataset_size": 0.3,
                    "training_args": {
                        "seed": 0,
                        "data_seed": 42,
                        "per_device_train_batch_size": 1,
                        "per_device_eval_batch_size": 1,
                        "gradient_accumulation_steps": 4,
                        "gradient_checkpointing": false,
                        "learning_rate": 0.0001,
                        "num_train_epochs": 5,
                        "max_steps": 10,
                        "logging_steps": 10,
                        "evaluation_strategy": "steps",
                        "eval_steps": 187,
                        "group_by_length": true,
                        "adam_beta2": 0.999,
                        "max_grad_norm": 0.3
                    }
                }
            },
            "merge_adapter_weights": {
                "type": "MergeAdapterWeights"
            },
            "builder": {
                "type": "ModelBuilder",
                "config": {
                    "precision": "int4"
                }
            }
        },
        "engine": {
            "log_severity_level": 0,
            "host": "local_system",
            "target": "local_system",
            "search_strategy": false,
            "cache_dir": "cache",
            "output_dir": "../models-cache/microsoft/phi3-mini-finetuned"
        }
    }
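To see what the `text_template` and `text_cols` settings produce, here is a minimal sketch that fills the template for a single record. It assumes the template is expanded with Python `str.format` semantics, which this section does not confirm; the `render_example` helper and the sample record are hypothetical and exist only for illustration.

```python
# Template and column names copied from the "pre_process_data" settings in the config.
TEXT_TEMPLATE = (
    "<|user|>\n{INSTRUCTION}<|end|>\n<|assistant|>\n{RESPONSE}"
    "\n( source : {SOURCE})<|end|>"
)
TEXT_COLS = ("INSTRUCTION", "RESPONSE", "SOURCE")


def render_example(record: dict) -> str:
    """Fill the template with one dataset record (hypothetical helper).

    Raises KeyError if the record lacks any column named in TEXT_COLS.
    """
    missing = [col for col in TEXT_COLS if col not in record]
    if missing:
        raise KeyError(f"record is missing columns: {missing}")
    return TEXT_TEMPLATE.format(**{col: record[col] for col in TEXT_COLS})


# Hypothetical record, not taken from any real dataset.
sample = {
    "INSTRUCTION": "What is Olive?",
    "RESPONSE": "A model optimization toolkit.",
    "SOURCE": "docs",
}
print(render_example(sample))
```

Running a few records from `../datasets/datasets.json` through a check like this before training is a cheap way to catch a misspelled column name.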

QLoRA

    {
        "input_model": {
            "type": "PyTorchModel",
            "config": {
                "hf_config": {
                    "model_name": "../models/microsoft/Phi-3-mini-4k-instruct",
                    "task": "text-generation",
                    "from_pretrained_args": {
                        "trust_remote_code": true
                    }
                }
            }
        },
        "systems": {
            "local_system": {
                "type": "LocalSystem",
                "config": {
                    "accelerators": [
                        {
                            "device": "GPU",
                            "execution_providers": [
                                "CUDAExecutionProvider"
                            ]
                        }
                    ]
                }
            }
        },
        "data_configs": [
            {
                "name": "dataset_default_train",
                "type": "HuggingfaceContainer",
                "params_config": {
                    "data_name": "json",
                    "data_files": "../datasets/datasets.json",
                    "split": "train",
                    "component_kwargs": {
                        "pre_process_data": {
                            "dataset_type": "corpus",
                            "text_cols": [
                                "INSTRUCTION",
                                "RESPONSE",
                                "SOURCE"
                            ],
                            "text_template": "<|user|>\n{INSTRUCTION}<|end|>\n<|assistant|>\n{RESPONSE}\n( source : {SOURCE})<|end|>",
                            "corpus_strategy": "join",
                            "source_max_len": 2048,
                            "pad_to_max_len": false,
                            "use_attention_mask": false
                        }
                    }
                }
            }
        ],
        "passes": {
            "qlora": {
                "type": "QLoRA",
                "config": {
                    "compute_dtype": "bfloat16",
                    "quant_type": "nf4",
                    "double_quant": true,
                    "lora_r": 64,
                    "lora_alpha": 64,
                    "lora_dropout": 0.1,
                    "train_data_config": "dataset_default_train",
                    "eval_dataset_size": 0.3,
                    "training_args": {
                        "seed": 0,
                        "data_seed": 42,
                        "per_device_train_batch_size": 1,
                        "per_device_eval_batch_size": 1,
                        "gradient_accumulation_steps": 4,
                        "gradient_checkpointing": false,
                        "learning_rate": 0.0001,
                        "num_train_epochs": 3,
                        "max_steps": 10,
                        "logging_steps": 10,
                        "evaluation_strategy": "steps",
                        "eval_steps": 187,
                        "group_by_length": true,
                        "adam_beta2": 0.999,
                        "max_grad_norm": 0.3
                    }
                }
            },
            "merge_adapter_weights": {
                "type": "MergeAdapterWeights"
            },
            "builder": {
                "type": "ModelBuilder",
                "config": {
                    "precision": "int4"
                }
            }
        },
        "engine": {
            "log_severity_level": 0,
            "host": "local_system",
            "target": "local_system",
            "search_strategy": false,
            "cache_dir": "cache",
            "output_dir": "../models-cache/microsoft/phi3-mini-finetuned"
        }
    }
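The `training_args` values interact in ways that are easy to miss, so the following sketch works through the arithmetic. It assumes these arguments are forwarded to the Hugging Face `Trainer`, where a positive `max_steps` overrides `num_train_epochs` and step-based evaluation runs every `eval_steps` optimizer steps. The `training_budget` helper is hypothetical.

```python
def training_budget(args: dict, num_devices: int = 1) -> dict:
    """Summarize how long a run lasts under the given training arguments.

    Assumes Hugging Face Trainer semantics: a positive max_steps caps the run
    regardless of num_train_epochs.
    """
    effective_batch = (
        args["per_device_train_batch_size"]
        * args["gradient_accumulation_steps"]
        * num_devices
    )
    max_steps = args.get("max_steps", -1)
    capped = max_steps > 0
    evaluations = None
    if capped and args.get("evaluation_strategy") == "steps":
        # One step-based evaluation each time the step counter hits a multiple of eval_steps.
        evaluations = max_steps // args["eval_steps"]
    return {
        "effective_batch_size": effective_batch,
        "capped_by_max_steps": capped,
        "samples_seen": effective_batch * max_steps if capped else None,
        "step_evaluations": evaluations,
    }


# Values copied from the QLoRA config.
qlora_args = {
    "per_device_train_batch_size": 1,
    "gradient_accumulation_steps": 4,
    "num_train_epochs": 3,
    "max_steps": 10,
    "evaluation_strategy": "steps",
    "eval_steps": 187,
}
print(training_budget(qlora_args))
```

Under those assumptions, both sample configs stop after 10 optimizer steps (about 40 training samples on one GPU) and never reach the first step-based evaluation at step 187. That suits a quick smoke test; for a real fine-tuning run you would likely raise or remove `max_steps`.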

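Notice

- If you use QLoRA, quantization conversion with ONNXRuntime-genai is not currently supported.
- You can set up the steps above to suit your needs; you do not have to configure all of them. Depending on your needs, you can use the algorithm steps directly, without fine-tuning. Finally, you need to configure the relevant engine.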
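As a sketch of choosing only the steps you need, the snippet below trims the `passes` section of a config dictionary while leaving everything else untouched. The `keep_passes` helper and the abridged config are hypothetical. Whether the remaining chain of passes is valid for your model is decided by Olive, not by this code.

```python
import copy


def keep_passes(config: dict, names: set) -> dict:
    """Return a copy of the config that contains only the named passes.

    The original pass order is preserved. Raises KeyError for unknown names.
    """
    unknown = sorted(set(names) - set(config["passes"]))
    if unknown:
        raise KeyError(f"unknown passes: {unknown}")
    trimmed = copy.deepcopy(config)
    trimmed["passes"] = {
        name: copy.deepcopy(cfg)
        for name, cfg in config["passes"].items()
        if name in names
    }
    return trimmed


# Abridged stand-in for the QLoRA config; only the pass names matter here.
config = {
    "passes": {
        "qlora": {"type": "QLoRA"},
        "merge_adapter_weights": {"type": "MergeAdapterWeights"},
        "builder": {"type": "ModelBuilder", "config": {"precision": "int4"}},
    },
    "engine": {"host": "local_system", "target": "local_system"},
}

# Example: keep fine-tuning and merging, but skip the ModelBuilder conversion.
finetune_only = keep_passes(config, {"qlora", "merge_adapter_weights"})
print(list(finetune_only["passes"]))
```

Working on a copy keeps the full config available, so you can write several variants to separate JSON files and run them independently.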

Running Microsoft Olive

After you finish the Microsoft Olive configuration, run the following command in a terminal:

olive run --config olive-config.json
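Before launching a long run, it can help to confirm that the names inside the config refer to things that exist. The sketch below checks two cross-references visible in the sample configs: `engine.host` and `engine.target` against `systems`, and each pass's `train_data_config` against `data_configs`. It is a hypothetical sanity check, not Olive's own validation, and `check_references` and `olive_command` are illustrative names.

```python
import json
import shlex
from pathlib import Path


def check_references(config: dict) -> list:
    """Return messages for dangling name references in an Olive-style config dict."""
    problems = []
    systems = config.get("systems", {})
    engine = config.get("engine", {})
    for role in ("host", "target"):
        name = engine.get(role)
        if name is not None and name not in systems:
            problems.append(f"engine.{role} refers to unknown system '{name}'")
    data_names = {d.get("name") for d in config.get("data_configs", [])}
    for pass_name, pass_cfg in config.get("passes", {}).items():
        ref = pass_cfg.get("config", {}).get("train_data_config")
        if ref is not None and ref not in data_names:
            problems.append(f"passes.{pass_name} refers to unknown data config '{ref}'")
    return problems


def olive_command(config_path: str) -> str:
    """Build the command line shown above for a given config path."""
    return shlex.join(["olive", "run", "--config", config_path])


config_file = Path("olive-config.json")
if config_file.exists():
    issues = check_references(json.loads(config_file.read_text(encoding="utf-8")))
    for issue in issues:
        print("problem:", issue)
    if not issues:
        print("ready to run:", olive_command(str(config_file)))
```

The script only prints the command rather than executing it, so the check stays free of side effects.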

通知

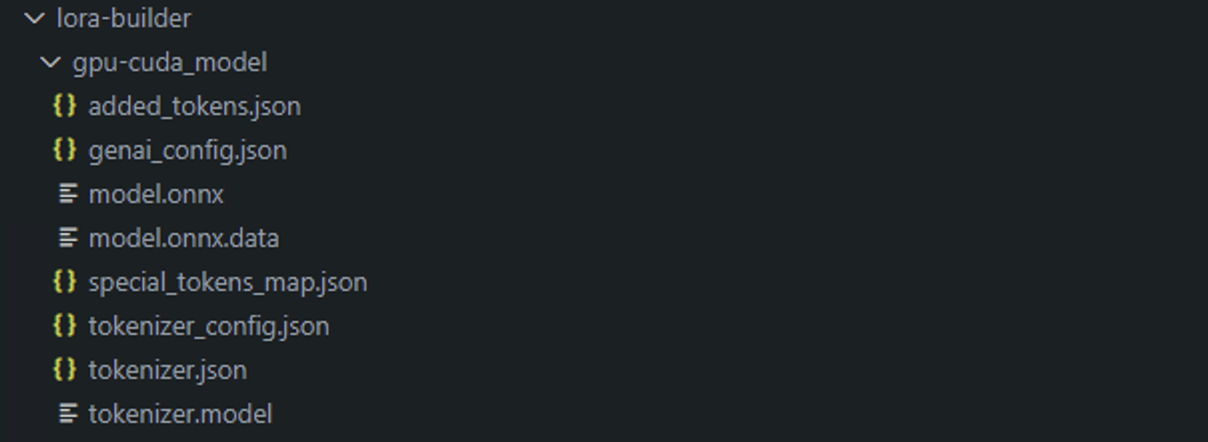

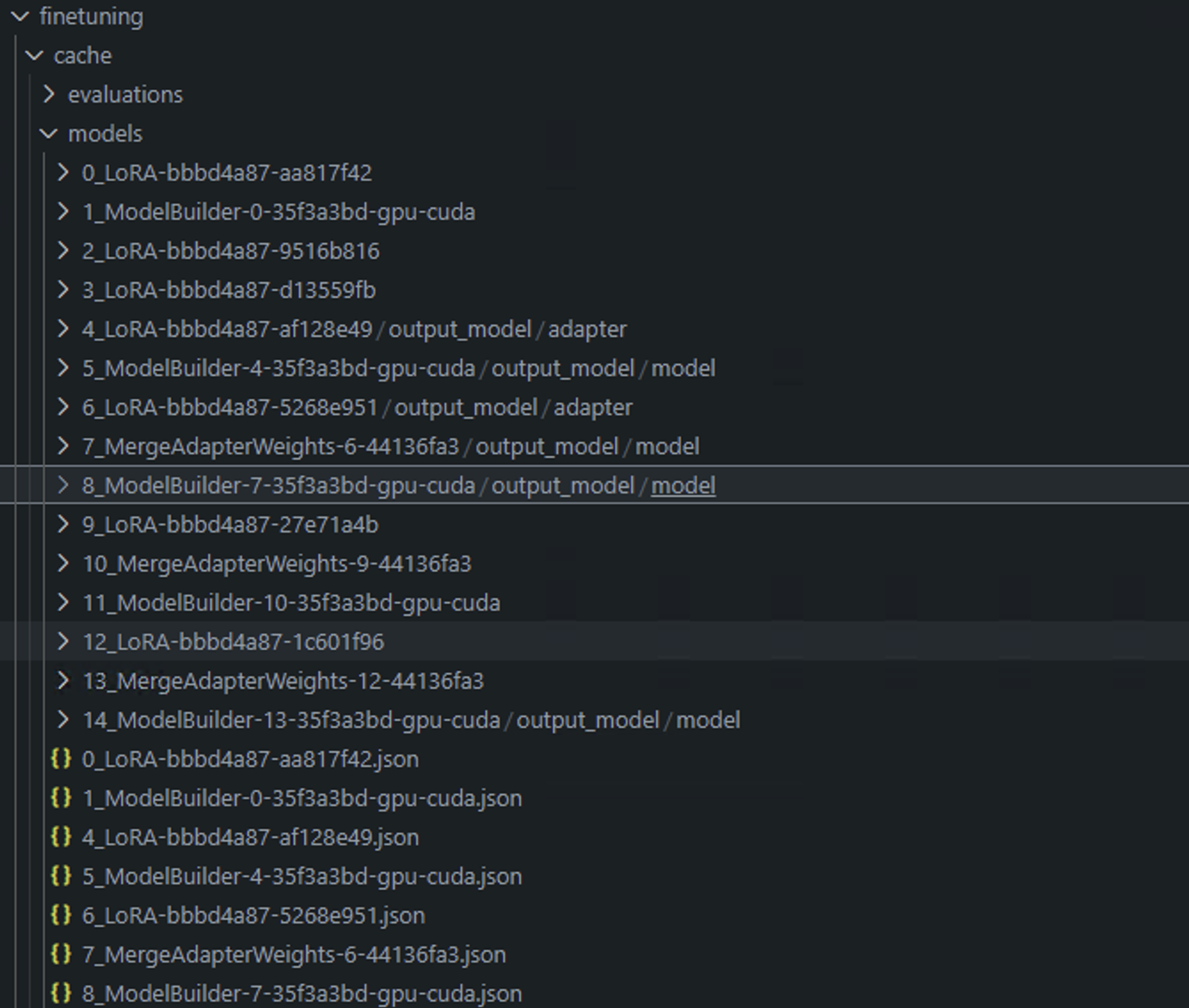

- 当执行Microsoft Olive时,每一步都可以被放入缓存中。我们可以通过查看微调目录来查看相关步骤的结果。

-

我们在这里提供了LoRA和QLoRA,您可以根据自己的需求进行设置。

-

推荐的运行环境是WSL/Ubuntu 22.04+。

-

为什么选择ORT?因为ORT可以部署在边缘设备上,在ORT环境中实现推理。
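To follow up on the caching note above, here is a small sketch for inspecting what a run left on disk. It assumes the `cache_dir` value `cache` from the sample configs and makes no assumption about how Olive names the entries inside it. `summarize_dir` is a hypothetical helper.

```python
from pathlib import Path


def summarize_dir(root: str) -> dict:
    """Map each top-level entry under root to its total size in bytes."""
    summary = {}
    for entry in sorted(Path(root).iterdir()):
        if entry.is_dir():
            size = sum(f.stat().st_size for f in entry.rglob("*") if f.is_file())
        else:
            size = entry.stat().st_size
        summary[entry.name] = size
    return summary


# "cache" is the cache_dir value used in the sample configs.
if Path("cache").is_dir():
    for name, size in summarize_dir("cache").items():
        print(f"{name}: {size} bytes")
```

The same function can be pointed at the `output_dir` path to check that the final model files were written.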