Using Phi-3 in Ollama

Ollama lets you deploy open-source LLMs and SLMs locally with simple commands, and it exposes an API that local Copilot-style applications can call.

1. Installation

Ollama runs on Windows, macOS, and Linux. You can download the installer from https://ollama.com/download. After installation, you can run Phi-3 directly from a terminal window with the Ollama command-line tool. You can browse all available models in the Ollama library.

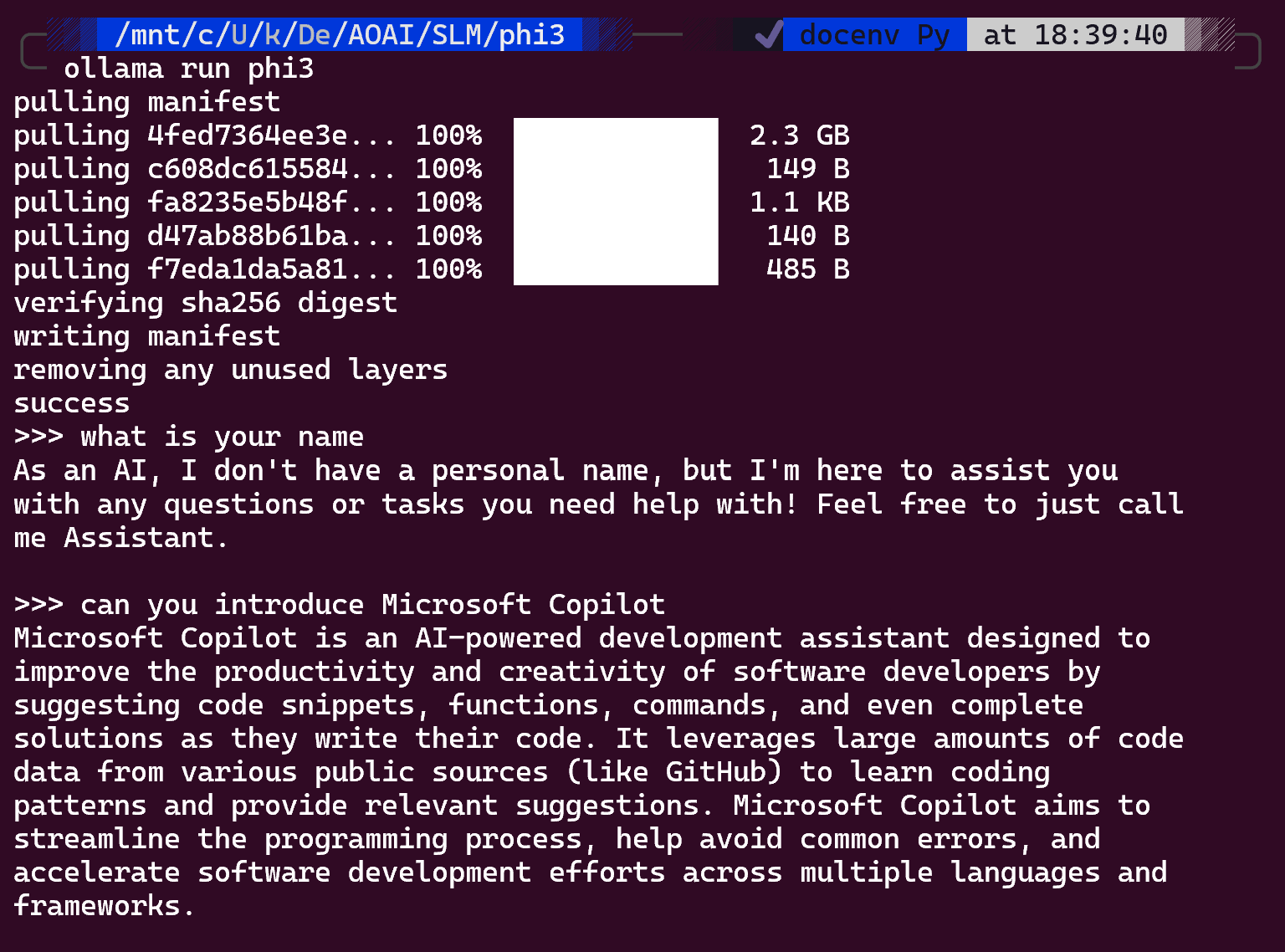

ollama run phi3

Note: The model is downloaded the first time you run it. You can also point Ollama at a Phi-3 model that you have already downloaded. The example here runs the command in WSL. Once the model has downloaded, you can interact with it directly in the terminal.

2. Call the Phi-3 API from Ollama

To call the Phi-3 API served by Ollama, start the Ollama server with this command in the terminal:

ollama serve

Note: On macOS or Linux, you may encounter the error "Error: listen tcp 127.0.0.1:11434: bind: address already in use" when running this command. It means that port 11434 is already taken, typically because Ollama is already running as a background service. To resolve it:

macOS

brew services restart ollama

Linux

sudo systemctl stop ollama
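To confirm that the error really comes from another process holding the port, a quick check like the following can help. This is a minimal sketch, not part of Ollama: the helper name `is_port_in_use` is made up for illustration, and it assumes the default address 127.0.0.1:11434 taken from the error message.

```python
import socket


def is_port_in_use(host: str = "127.0.0.1", port: int = 11434,
                   timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds.

    Assumption: the defaults match the address shown in the
    "address already in use" error message.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0
```

Calling `is_port_in_use()` with no arguments returns `True` when something is listening on the default port. It does not tell you which process that is.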

3. Expose the Ollama server

- Stop the Ollama service:

sudo systemctl stop ollama

- Edit the Ollama service file at /etc/systemd/system/ollama.service:

sudo vim /etc/systemd/system/ollama.service

Add the following lines under the [Service] section:

[Service]

Environment="OLLAMA_HOST=0.0.0.0"

Environment="OLLAMA_ORIGINS=*"

Save and quit.

- Reload systemd and restart the Ollama service:

sudo systemctl daemon-reload

sudo systemctl restart ollama.service

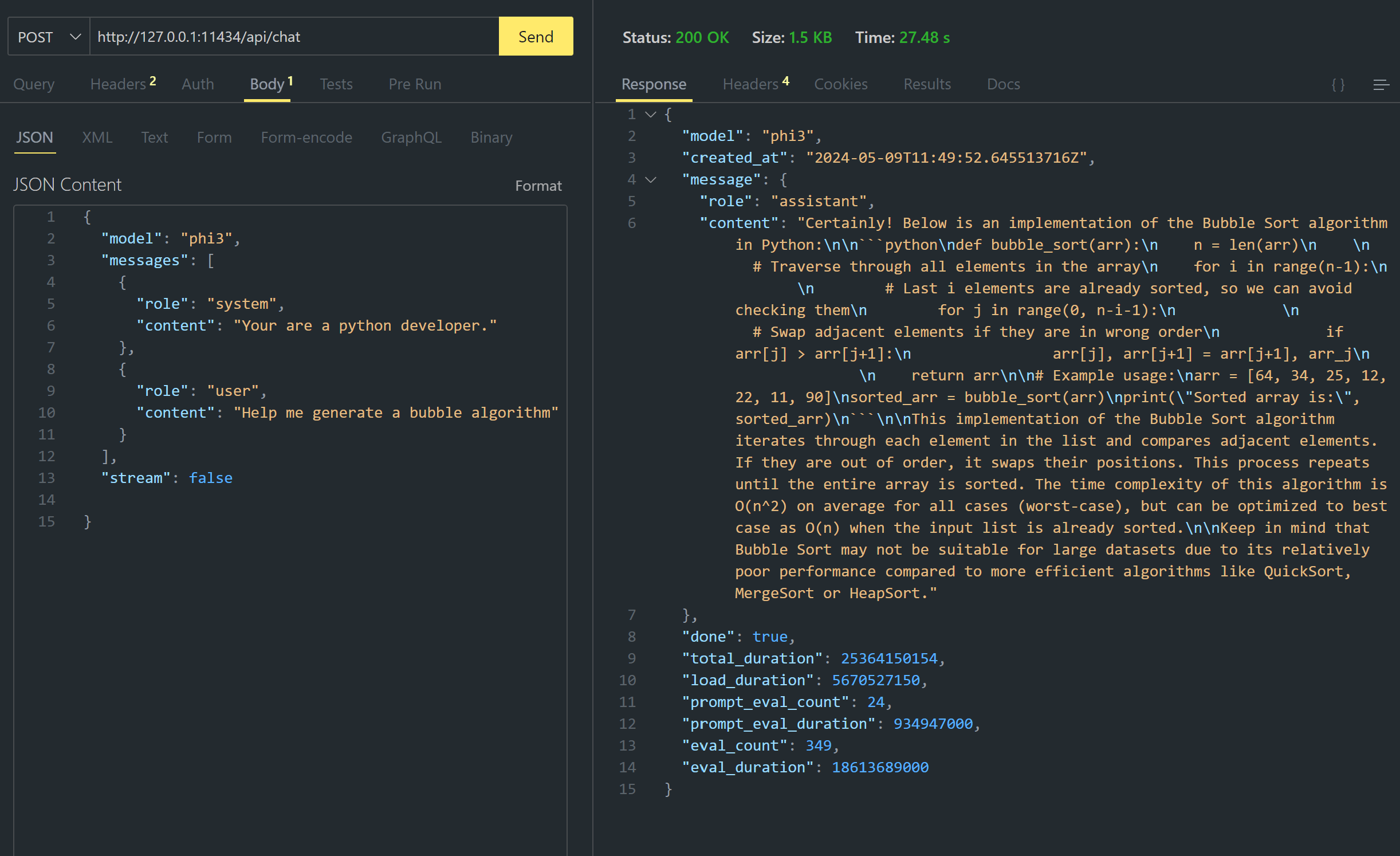
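After the restart, one way to check that the server is reachable from another machine is to request the list of local models from the `/api/tags` endpoint. The sketch below assumes a hypothetical server address, `192.168.1.10`, which you would replace with your own; the helper names are illustrative only.

```python
import json
import urllib.request

# Hypothetical address of the machine running Ollama; replace with your own.
SERVER_URL = "http://192.168.1.10:11434"


def model_names(tags_response: dict) -> list:
    """Extract the model names from the JSON returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_models(base_url: str = SERVER_URL, timeout: float = 10.0) -> list:
    """Ask the Ollama server which models it has available locally."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=timeout) as resp:
        return model_names(json.loads(resp.read().decode("utf-8")))
```

If `list_models()` raises a connection error from a remote machine but works against 127.0.0.1 on the server itself, the `OLLAMA_HOST` setting or a firewall rule is a likely place to look.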

Ollama supports two APIs: generate and chat. You can call whichever one suits your needs. The local service listens on port 11434. For example:

Chat

curl http://127.0.0.1:11434/api/chat -d '{
  "model": "phi3",
  "messages": [
    {
      "role": "system",
      "content": "You are a Python developer."
    },
    {
      "role": "user",
      "content": "Help me generate a bubble sort algorithm"
    }
  ],
  "stream": false
}'
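The same chat request can be sent from a program. The following is a minimal sketch using only the Python standard library; the helper names (`build_chat_payload`, `extract_chat_reply`, `chat`) are illustrative and not part of Ollama, and the code assumes the server is running at the default local address with the `phi3` model available.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # default local address used in this guide


def build_chat_payload(system_prompt: str, user_prompt: str,
                       model: str = "phi3") -> dict:
    """Build the JSON body for POST /api/chat with streaming disabled."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "stream": False,
    }


def extract_chat_reply(response: dict) -> str:
    """Return the assistant text from a non-streaming /api/chat response."""
    return response["message"]["content"]


def chat(system_prompt: str, user_prompt: str, model: str = "phi3",
         base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send one chat request and return the model's reply as text."""
    body = json.dumps(build_chat_payload(system_prompt, user_prompt, model))
    request = urllib.request.Request(
        base_url + "/api/chat",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_chat_reply(json.loads(resp.read().decode("utf-8")))
```

With the server running, `chat("You are a Python developer.", "Help me generate a bubble sort algorithm")` would return the reply as a string. The timeout is set generously because the first request may need to load the model.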

Generate

curl http://127.0.0.1:11434/api/generate -d '{
  "model": "phi3",
  "prompt": "<|system|>You are my AI assistant.<|end|><|user|>tell me how to learn AI<|end|><|assistant|>",
  "stream": false
}'
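The generate request takes a single prompt string that uses the Phi-3 markers `<|system|>`, `<|user|>`, `<|end|>`, and `<|assistant|>`. As a rough sketch, the prompt can be assembled and sent from Python as follows; the helper names are hypothetical, and the code assumes the default local server address and the `phi3` model.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # default local address used in this guide


def format_phi3_prompt(system_prompt: str, user_prompt: str) -> str:
    """Wrap the system and user text in the Phi-3 markers used above."""
    return (
        f"<|system|>{system_prompt}<|end|>"
        f"<|user|>{user_prompt}<|end|>"
        "<|assistant|>"
    )


def build_generate_payload(system_prompt: str, user_prompt: str,
                           model: str = "phi3") -> dict:
    """Build the JSON body for POST /api/generate with streaming disabled."""
    return {
        "model": model,
        "prompt": format_phi3_prompt(system_prompt, user_prompt),
        "stream": False,
    }


def extract_generate_reply(response: dict) -> str:
    """Return the generated text from a non-streaming /api/generate response."""
    return response["response"]


def generate(system_prompt: str, user_prompt: str, model: str = "phi3",
             base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send one generate request and return the generated text."""
    body = json.dumps(build_generate_payload(system_prompt, user_prompt, model))
    request = urllib.request.Request(
        base_url + "/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_generate_reply(json.loads(resp.read().decode("utf-8")))
```

Keeping the prompt formatting in its own function makes it easy to check the exact string that is sent before any network call happens.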

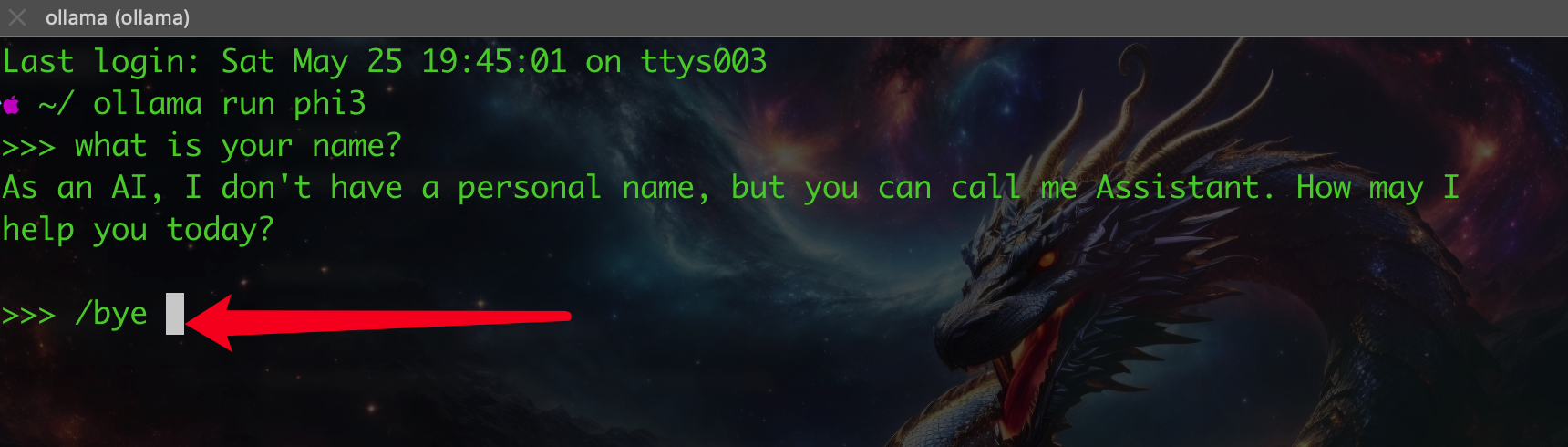

4. Quit the Ollama prompt

- To quit Ollama, type /bye or press Ctrl + D.

5. WebUI deployment

- Install Nextchat as the WebUI for calling the Ollama API.

- Download URL: Nextchat

- Configure the parameters as shown in the following figure:

- Start your AI journey and have fun!